Deploying machine learning models has always been a struggle.

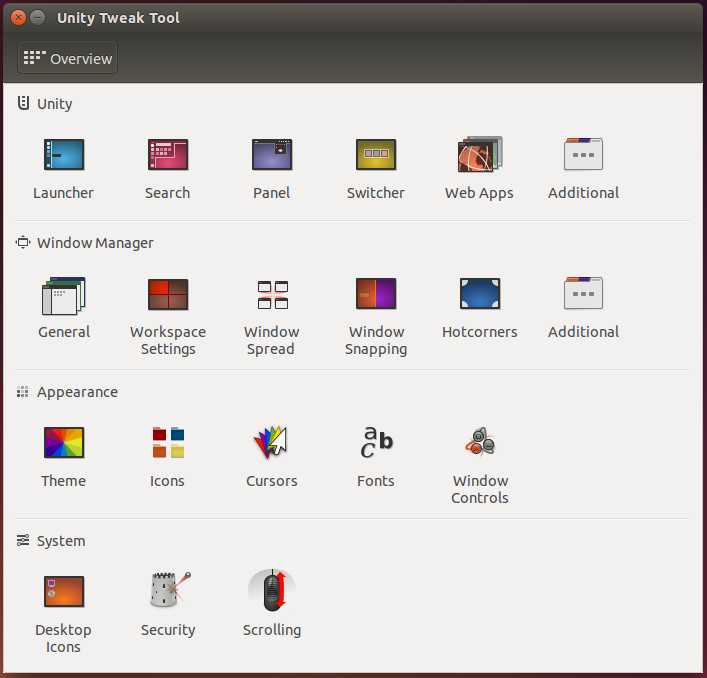

Most of the software industry has adopted container engines like Docker for deploying code to production. The machine learning community has shied away from this option, because accessing hardware resources like GPUs from within Docker was difficult and required hacky, driver-specific workarounds.

With the recent release of NVIDIA’s nvidia-docker tool, however, accessing GPUs from within Docker is a breeze, and we’re already reaping the benefits here at indico. In this tutorial we’ll walk you through setting up nvidia-docker so you too can deploy machine learning models with ease.

Before we get into the details, however, let’s talk briefly about why using Docker for your next data science project may be a good choice. There is certainly a learning curve for the tools in the Docker ecosystem, but the benefits are worth the effort.
